Some useful libraries

Fanny Ducel (CC BY-NC-SA) -- 2024

Before we start:

- If you have exam accommodations ("tiers temps", i.e. extra time, or other "aménagements"), please email me or let me know in some other way so that I can prepare what you need.

- Let's go back to Wooclap to review some notions from last week.

This notebook corresponds to your seventh lecture and covers the following libraries: NumPy, Pandas, spaCy, tqdm and matplotlib.

Unlike last week, these libraries are not pre-installed in your environment, so you may need to install them yourself:

Run pip install pandas in your terminal, or !pip install pandas directly in a notebook cell (replace pandas with the name of the library you need).

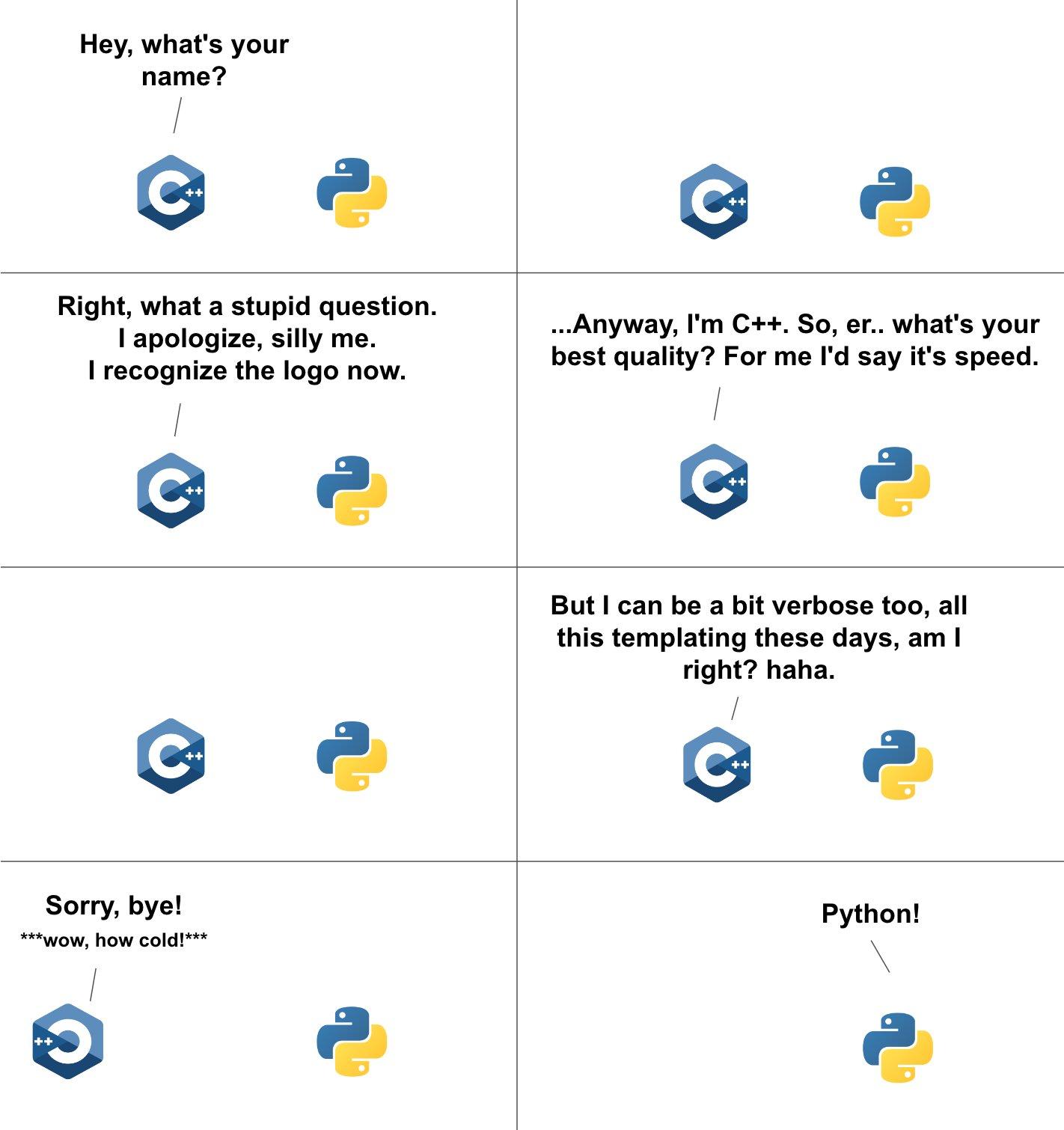

You'll see that some of these libraries are partially written in C or C++, which makes them much faster and more efficient than equivalent code written in pure Python.

NumPy

NumPy (Numerical Python) is a library for working with arrays, widely used in data science.

It is much more efficient than Python lists (up to 50 times faster for numerical operations), because it is partially written in C and C++ and stores its elements in a contiguous block of memory. Its arrays also take less space in memory.
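One visible consequence: NumPy applies arithmetic to every element at once (vectorization), whereas the same operator on a list does something else. A minimal sketch comparing the two (outputs in comments):

```python
import numpy as np

numbers_list = [1, 2, 3]
numbers_array = np.array([1, 2, 3])

# On a Python list, * 2 repeats the list
print(numbers_list * 2)   # [1, 2, 3, 1, 2, 3]

# On a NumPy array, * 2 multiplies every element, no explicit loop needed
print(numbers_array * 2)  # [2 4 6]

# With a list, you need a loop (here a list comprehension) to get the same result
print([n * 2 for n in numbers_list])  # [2, 4, 6]
```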

import numpy as np

arr = np.array([1, 2, 3, 4, 5])

print(arr, type(arr))

[1 2 3 4 5] <class 'numpy.ndarray'>

# You can create multi-dimensional or 0-D arrays:

arr_0d = np.array(84)

arr_1d = np.array([1, 2, 3, 4, 5])

arr_2d = np.array([[4, 1, 3], [6, 2, 3]])

arr_3d = np.array([[[1, 2, 3], [4, 5, 6]], [[1, 2, 3], [4, 5, 6]]])

arr_5d = np.array([1, 2, 3, 4], ndmin=5)

print(arr_2d)

# Check the number of dimensions

print(arr_2d.ndim)

# Check the shape of the array

print(arr_2d.shape)

[[4 1 3]
 [6 2 3]]
2
(2, 3)
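The other arrays defined above can be inspected the same way. A short sketch (outputs in comments); the last line uses reshape, which rearranges the same elements into another shape:

```python
import numpy as np

arr_0d = np.array(84)
arr_3d = np.array([[[1, 2, 3], [4, 5, 6]], [[1, 2, 3], [4, 5, 6]]])
arr_5d = np.array([1, 2, 3, 4], ndmin=5)

# ndim counts the dimensions; shape gives the size along each dimension
print(arr_0d.ndim, arr_0d.shape)  # 0 ()
print(arr_3d.ndim, arr_3d.shape)  # 3 (2, 2, 3)
print(arr_5d.ndim, arr_5d.shape)  # 5 (1, 1, 1, 1, 4)

# reshape puts the 6 elements 0..5 into 2 rows of 3
print(np.arange(6).reshape(2, 3))
```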

# Like in lists, you can access array elements with indexes:

#print(arr_1d[2])

#print(arr_2d[1,1])

# You can also use slicing

#print(arr_1d[1:3])
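Here is what these commented lines print, plus a few more indexing patterns (a minimal sketch, outputs in comments). In a 2-D array, a `:` in one position means "everything along that dimension":

```python
import numpy as np

arr_1d = np.array([1, 2, 3, 4, 5])
arr_2d = np.array([[4, 1, 3], [6, 2, 3]])

print(arr_1d[2])      # 3
print(arr_1d[-1])     # 5 (negative indexes count from the end, as in lists)
print(arr_1d[1:3])    # [2 3] (the end index is excluded)
print(arr_2d[1, 1])   # 2 (row 1, column 1)
print(arr_2d[:, 0])   # [4 6] (every row, column 0)
print(arr_2d[0, 1:])  # [1 3] (row 0, from column 1 onwards)
```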

# And for loops!

# How do you go through a 2-D array with a for loop? One loop per dimension:
for row in arr_2d:
    for number in row:
        print(number)

4 1 3 6 2 3
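Writing one nested loop per dimension gets tedious for 3-D or 5-D arrays. As a sketch of two alternatives: `np.nditer` visits every element of an array of any shape, and `flatten()` turns the array into a 1-D copy first.

```python
import numpy as np

arr_2d = np.array([[4, 1, 3], [6, 2, 3]])

# np.nditer yields each element, whatever the number of dimensions
for number in np.nditer(arr_2d):
    print(number)

# Alternative: flatten the array into 1-D, then use a single loop
for number in arr_2d.flatten():
    print(number)
```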

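# You can also search an array: np.where returns the indexes where the condition is True
search = np.where(arr_1d == 4)
print(search)

# np.sort sorts each row (the last axis) by default
print(np.sort(arr_2d))

(array([3]),)
[[1 3 4]
 [2 3 6]]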
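On a 2-D array, `np.where` returns one array of row indexes and one of column indexes, and `np.sort` accepts an `axis` argument. A minimal sketch (outputs in comments), also showing that a condition can select elements directly:

```python
import numpy as np

arr_2d = np.array([[4, 1, 3], [6, 2, 3]])

# Row indexes and column indexes of the cells equal to 3
rows, cols = np.where(arr_2d == 3)
print(rows, cols)  # [0 1] [2 2] -> the 3s are at (0, 2) and (1, 2)

# axis=None sorts all elements into a single 1-D array
print(np.sort(arr_2d, axis=None))  # [1 2 3 3 4 6]

# A boolean condition inside the brackets keeps only matching elements
print(arr_2d[arr_2d > 2])  # [4 3 6 3]
```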

Pandas

A library to easily analyze, clean, explore and manipulate tabular data. It is built on top of NumPy and is very useful for data science.

import pandas as pd

# You can convert a dict to a DataFrame

fruits = {

"labels" : ["apple", "pineapple", "mango", "banana"],

"prices" : [0.1, 0.8, 0.7, 0.2]

}

df_fruits = pd.DataFrame(fruits)

#df_fruits

#print(df_fruits.info())

print(df_fruits.loc[0])

labels    apple
prices      0.1
Name: 0, dtype: object
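A few more ways to access and modify the fruit table, as a minimal sketch: `.loc` selects by index label, `.iloc` by integer position, and a boolean condition inside `df[...]` keeps the matching rows. The new column name `prices_plus_20` is only an illustration.

```python
import pandas as pd

fruits = {
    "labels": ["apple", "pineapple", "mango", "banana"],
    "prices": [0.1, 0.8, 0.7, 0.2],
}
df_fruits = pd.DataFrame(fruits)

# Select one column (a Series) by its name
print(df_fruits["prices"].max())  # 0.8

# .loc uses index labels, .iloc uses integer positions
print(df_fruits.loc[1, "labels"])    # pineapple
print(df_fruits.iloc[-1]["labels"])  # banana

# Keep only the rows that satisfy a condition
expensive = df_fruits[df_fruits["prices"] > 0.5]
print(expensive)

# Add a new column computed from an existing one (hypothetical 20% increase)
df_fruits["prices_plus_20"] = df_fruits["prices"] * 1.2
print(df_fruits)
```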

# Read and clean a CSV file

# Random file from https://www.data.gouv.fr/fr/datasets/effectifs-deleves-par-niveau-sexe-langues-vivantes-1-et-2-les-plus-frequentes-par-college-date-dobservation-au-debut-du-mois-doctobre-chaque-annee/

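# First attempt, without sep: this file uses semicolons, not commas, so the columns are not split correctly
# df = pd.read_csv("fr-en-college-effectifs-niveau-sexe-lv.csv")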

df = pd.read_csv("fr-en-college-effectifs-niveau-sexe-lv.csv", sep=";")

# Let's have a first look at it to see what it is about

#print(df.info())

#df

DtypeWarning: Columns (6) have mixed types. Specify dtype option on import or set low_memory=False.
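The `sep` and `dtype` options can be tried without downloading the file. A minimal sketch, assuming a tiny made-up semicolon-separated excerpt (not the real dataset): `io.StringIO` makes the string behave like a file, and `dtype={"code_dept": str}` keeps department codes such as "031" or "2A" as text, instead of a column mixing numbers and strings (the kind of situation the warning above complains about).

```python
import io

import pandas as pd

# Hypothetical semicolon-separated excerpt with made-up values
csv_text = """code_dept;departement;nombre_de_3emes
031;HAUTE-GARONNE;120
2A;CORSE-DU-SUD;45
"""

# Without sep=";", pandas expects commas and puts everything in one column
df_default = pd.read_csv(io.StringIO(csv_text))
print(df_default.shape)  # (2, 1)

# sep=";" splits the columns; dtype keeps the codes as strings ("031", not 31)
df = pd.read_csv(io.StringIO(csv_text), sep=";", dtype={"code_dept": str})
print(df.shape)  # (2, 3)
print(df["code_dept"].tolist())  # ['031', '2A']
```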

# Let's clean it up!
df = df.dropna()  # remove the rows that contain missing values
# or, to replace missing values instead: df = df.fillna("Undetermined")
df = df.drop_duplicates()  # remove duplicated rows

# To check if a cell is "na"
#print(df['code_region'].isna())

# To check if a cell is NOT "na"
print(df['code_region'].notna())

0 True

1 True

2 True

3 True

4 True

...

41752 True

41753 True

41754 True

41755 True

41756 True

Name: code_region, Length: 41727, dtype: bool
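To see what these cleaning methods do, here is a minimal sketch on a small made-up table with one missing value and one duplicated row. Note that `dropna`, `fillna` and `drop_duplicates` return a new DataFrame, so you need to assign the result (as in `df = df.dropna()`).

```python
import pandas as pd

# Hypothetical table: row 2 has a missing academie, rows 0 and 1 are identical
df_small = pd.DataFrame({
    "academie": ["LYON", "LYON", None, "NANCY-METZ"],
    "eleves": [250, 250, 120, 30],
})

# Count the missing values in each column
print(df_small.isna().sum())

# dropna removes rows with a missing value; fillna replaces missing values
print(len(df_small.dropna()))  # 3
print(df_small.fillna("Undetermined")["academie"].tolist())

# drop_duplicates removes rows identical to an earlier row
print(len(df_small.drop_duplicates()))  # 3
```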

# Manipulate the data

interesting_col = "nombre_de_3emes_lv1_anglais"

print("Mean",df[interesting_col].mean())

print("Median",df[interesting_col].median())

print("Min",df[interesting_col].min())

print("Max",df[interesting_col].max())

print("Correlation",df[interesting_col].corr(df["code_region"]))  # code_region is a region identifier, so this correlation is only a syntax example

print(df.sort_values(by=interesting_col))

Mean 95.2630670788698

Median 95.0

Min 0

Max 505

Correlation 0.06624079122832621

num_ligne rentree_scolaire code_region region_academique \

30192 15279 2020 16 OCCITANIE

8037 40104 2023 16 OCCITANIE

2189 20812 2021 10 ILE-DE-FRANCE

13974 27423 2022 6 GRAND EST

22178 14822 2020 15 NOUVELLE-AQUITAINE

... ... ... ... ...

6230 894 2019 1 AUVERGNE-RHONE-ALPES

5760 38664 2023 13 MAYOTTE

4940 34282 2023 1 AUVERGNE-RHONE-ALPES

15971 17570 2021 1 AUVERGNE-RHONE-ALPES

27558 9227 2020 1 AUVERGNE-RHONE-ALPES

code_aca academie code_dept departement code_postal \

30192 16 TOULOUSE 031 HAUTE-GARONNE 31750

8037 11 MONTPELLIER 034 HERAULT 34000

2189 24 CRETEIL 093 SEINE-SAINT-DENIS 93200

13974 12 NANCY-METZ 057 MOSELLE 57620

22178 13 POITIERS 086 VIENNE 86036

... ... ... ... ... ...

6230 10 LYON 069 RHONE 69321

5760 43 MAYOTTE 976 MAYOTTE 97660

4940 10 LYON 069 RHONE 69321

15971 10 LYON 069 RHONE 69321

27558 10 LYON 069 RHONE 69321

commune ... nombre_de_3emes_garcons nombre_de_3emes_lv1_allemand \

30192 ESCALQUENS ... 0 0

8037 MONTPELLIER ... 0 0

2189 SAINT-DENIS ... 0 0

13974 LEMBERG ... 30 73

22178 POITIERS ... 0 0

... ... ... ... ...

6230 LYON ... 247 0

5760 DEMBENI ... 212 0

4940 LYON ... 250 0

15971 LYON ... 255 0

27558 LYON ... 275 0

nombre_de_3emes_lv1_anglais nombre_de_3emes_lv1_espagnol \

30192 0 0

8037 0 0

2189 0 0

13974 0 0

22178 0 0

... ... ...

6230 476 0

5760 478 0

4940 485 0

15971 504 0

27558 505 0

nombre_de_3emes_lv1_autres_langues nombre_de_3emes_lv2_allemand \

30192 0 0

8037 0 0

2189 0 0

13974 0 0

22178 0 0

... ... ...

6230 0 118

5760 0 0

4940 0 125

15971 0 132

27558 0 95

nombre_de_3emes_lv2_anglais nombre_de_3emes_lv2_espagnol \

30192 0 0

8037 0 0

2189 0 0

13974 73 0

22178 0 0

... ... ...

6230 0 313

5760 0 447

4940 0 322

15971 0 324

27558 0 347

nombre_de_3emes_lv2_italien nombre_de_3emes_lv2_autres_langues

30192 0 0

8037 0 0

2189 0 0

13974 0 0

22178 0 0

... ... ...

6230 45 0

5760 0 31

4940 38 0

15971 48 0

27558 63 0

[41727 rows x 80 columns]
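Statistics can also be computed per group, for example per academie, with `groupby`. A minimal sketch on a made-up mini version of the dataset (the numbers are invented), also showing `describe()`, which returns several statistics at once:

```python
import pandas as pd

# Hypothetical mini version of the dataset (made-up numbers)
df_small = pd.DataFrame({
    "academie": ["LYON", "LYON", "NANCY-METZ", "NANCY-METZ"],
    "nombre_de_3emes_lv1_anglais": [476, 504, 0, 20],
})

# Mean of the column for each academie
means = df_small.groupby("academie")["nombre_de_3emes_lv1_anglais"].mean()
print(means)

# describe() gives count, mean, std, min, quartiles and max in one call
summary = df_small["nombre_de_3emes_lv1_anglais"].describe()
print(summary)
```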

# Create filters

filter_year = df[df['rentree_scolaire'] < 2020]

#print(filter_year)

filter_academie = df[df['academie'] == "NANCY-METZ"]

#filter_academie

filter_german = df[df['nombre_de_3emes_lv1_allemand'] > df['nombre_de_3emes_lv1_anglais']]

# filter_german

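# Combine conditions (notice the syntax: parentheses around each condition, '&' for "and", '|' for "or")
# Here '|' keeps the rows in NANCY-METZ OR with more German than English as LV1; use '&' to require both
filter_nancygerman = df[(df['academie'] == "NANCY-METZ") | (df['nombre_de_3emes_lv1_allemand'] > df['nombre_de_3emes_lv1_anglais'])]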

#filter_nancygerman
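The parentheses are required because, in Python, `&` and `|` bind more tightly than comparisons such as `==` or `>`. A minimal sketch on made-up rows, comparing `&`, `|` and `~` (negation):

```python
import pandas as pd

# Hypothetical rows (made-up numbers)
df_small = pd.DataFrame({
    "academie": ["NANCY-METZ", "NANCY-METZ", "STRASBOURG", "LYON"],
    "nombre_de_3emes_lv1_allemand": [73, 0, 90, 0],
    "nombre_de_3emes_lv1_anglais": [0, 120, 40, 476],
})

# Each condition is a Series of True/False values
in_nancy = df_small["academie"] == "NANCY-METZ"
more_german = df_small["nombre_de_3emes_lv1_allemand"] > df_small["nombre_de_3emes_lv1_anglais"]

print(len(df_small[in_nancy & more_german]))  # 1: both conditions
print(len(df_small[in_nancy | more_german]))  # 3: at least one condition
print(len(df_small[~in_nancy]))               # 2: condition negated
```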

# Visualize

import matplotlib.pyplot as plt

df.plot(kind = 'scatter', x = 'region_academique', y = 'nombre_de_3emes_lv1_allemand')

plt.xticks(rotation=90)

plt.show()

df[interesting_col].plot(kind='hist')

<Axes: ylabel='Frequency'>

spaCy

For NLP: tokenization, POS tagging, dependency parsing, lemmatization, sentence boundary detection, NER, entity linking, similarity, text classification, rule-based matching, training, ...

It relies on trained models and pipelines for many languages, and some languages also have transformer-based models (https://spacy.io/models). To download the small English model, run:

python -m spacy download en_core_web_sm (add a "!" in front if you run it in a notebook)

Note (NLP students): for French, the models are partially based on UD_French-Sequoia, a project involving Bruno Guillaume!

import spacy

nlp = spacy.load("en_core_web_sm")

# From Wikipedia

pythonidae = nlp("The Pythonidae, commonly known as pythons, are a family of over 40 nonvenomous snakes found in Africa, Asia, and Australia.")

print("TEXT, LEMMA, POS, TAG, DEP")

for token in pythonidae:
    print(token.text, token.lemma_, token.pos_, token.tag_, token.dep_)

TEXT, LEMMA, POS, TAG, DEP
The the DET DT det
Pythonidae Pythonidae PROPN NNP nsubj
, , PUNCT , punct
commonly commonly ADV RB advmod
known know VERB VBN acl
as as ADP IN prep
pythons python NOUN NNS pobj
, , PUNCT , punct
are be AUX VBP ROOT
a a DET DT det
family family NOUN NN attr
of of ADP IN prep
over over ADP IN quantmod
40 40 NUM CD nummod
nonvenomous nonvenomous ADJ JJ amod
snakes snake NOUN NNS pobj
found find VERB VBN acl
in in ADP IN prep
Africa Africa PROPN NNP pobj
, , PUNCT , punct
Asia Asia PROPN NNP conj
, , PUNCT , punct
and and CCONJ CC cc
Australia Australia PROPN NNP conj
. . PUNCT . punct

from spacy import displacy

displacy.render(pythonidae, style="dep")

for ent in pythonidae.ents:
    print(ent.text, ent.start_char, ent.end_char, ent.label_)

over 40 59 66 CARDINAL
Africa 95 101 LOC
Asia 103 107 LOC
Australia 113 122 GPE

displacy.render(pythonidae, style="ent")

python = nlp("Python is a programming language that we are currently learning.")

master = nlp("I am a master's students , I study computer science and programming among other things")

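# Similarity of two documents (en_core_web_sm has no word vectors, hence the warning; en_core_web_md and en_core_web_lg include them)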

print(python.similarity(master))

print(python.similarity(pythonidae))

print(pythonidae.similarity(master))

0.40341952443122864 0.22934463620185852 0.424297958612442

UserWarning: [W007] The model you're using has no word vectors loaded, so the result of the Doc.similarity method will be based on the tagger, parser and NER, which may not give useful similarity judgements. This may happen if you're using one of the small models, e.g. `en_core_web_sm`, which don't ship with word vectors and only use context-sensitive tensors. You can always add your own word vectors, or use one of the larger models instead if available.
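What does `similarity` compute? According to the spaCy documentation, the default estimate is the cosine similarity of the vectors, and a `Doc` vector defaults to the average of its token vectors. A minimal NumPy sketch of that computation, with tiny made-up 3-dimensional vectors standing in for real ones (real word vectors have hundreds of dimensions); `cosine_similarity` is a helper written here for illustration, not a spaCy function:

```python
import numpy as np

def cosine_similarity(a, b):
    # Dot product divided by the product of the two vector lengths
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))

# Hypothetical token vectors (one row per token, made-up values)
doc1_tokens = np.array([[1.0, 0.0, 1.0], [0.0, 1.0, 1.0]])
doc2_tokens = np.array([[1.0, 1.0, 0.0], [1.0, 1.0, 2.0]])

# A document vector as the average of its token vectors
doc1_vector = doc1_tokens.mean(axis=0)  # [0.5 0.5 1. ]
doc2_vector = doc2_tokens.mean(axis=0)  # [1. 1. 1.]

print(cosine_similarity(doc1_vector, doc2_vector))
```

A value of 1 means the vectors point in the same direction, and 0 means they are orthogonal (unrelated).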

🥳 YOUR TURN: Compute the similarity between the word at index 3 in the text python and the word at index 9 in master (remember that indexing starts at 0)

# CODE ME

python_ = "Pythons are snakes..."

p3 = python[3]

m9 = master[9]

print(p3, m9)

print(p3.similarity(m9))

programming computer 0.5470353960990906

UserWarning: [W007] The model you're using has no word vectors loaded, so the result of the Token.similarity method will be based on the tagger, parser and NER, which may not give useful similarity judgements. This may happen if you're using one of the small models, e.g. `en_core_web_sm`, which don't ship with word vectors and only use context-sensitive tensors. You can always add your own word vectors, or use one of the larger models instead if available.

tqdm

A library to display progress bars for Python loops

from tqdm import tqdm

import time

# The syntax is the following: for word in tqdm(word_list)

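🥳 YOUR TURN: Write a loop that prints the numbers from 1 to 20, with a pause of 0.1 seconds between iterations

for i in tqdm(range(1, 21)):
    print(i)
    # This run used a 1-second pause (hence 1.00s/it below); time.sleep(0.1) gives the requested 0.1 s
    time.sleep(1)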

0%| | 0/20 [00:00<?, ?it/s]

1

5%|██▏ | 1/20 [00:01<00:19, 1.00s/it]

2

10%|████▍ | 2/20 [00:02<00:18, 1.00s/it]

3

15%|██████▌ | 3/20 [00:03<00:17, 1.00s/it]

4

20%|████████▊ | 4/20 [00:04<00:16, 1.00s/it]

5

25%|███████████ | 5/20 [00:05<00:15, 1.00s/it]

6

30%|█████████████▏ | 6/20 [00:06<00:14, 1.00s/it]

7

35%|███████████████▍ | 7/20 [00:07<00:13, 1.00s/it]

8

40%|█████████████████▌ | 8/20 [00:08<00:12, 1.00s/it]

9

45%|███████████████████▊ | 9/20 [00:09<00:11, 1.00s/it]

10

50%|█████████████████████▌ | 10/20 [00:10<00:10, 1.00s/it]

11

55%|███████████████████████▋ | 11/20 [00:11<00:09, 1.00s/it]

12

60%|█████████████████████████▊ | 12/20 [00:12<00:08, 1.00s/it]

13

65%|███████████████████████████▉ | 13/20 [00:13<00:07, 1.00s/it]

14

70%|██████████████████████████████ | 14/20 [00:14<00:06, 1.00s/it]

15

75%|████████████████████████████████▎ | 15/20 [00:15<00:05, 1.00s/it]

16

80%|██████████████████████████████████▍ | 16/20 [00:16<00:04, 1.00s/it]

17

85%|████████████████████████████████████▌ | 17/20 [00:17<00:03, 1.00s/it]

18

90%|██████████████████████████████████████▋ | 18/20 [00:18<00:02, 1.00s/it]

19

95%|████████████████████████████████████████▊ | 19/20 [00:19<00:01, 1.00s/it]

20

100%|███████████████████████████████████████████| 20/20 [00:20<00:00, 1.00s/it]
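A few more `tqdm` options, as a sketch: `desc` adds a label in front of the bar, `total` tells tqdm how many iterations to expect when the iterable has no length (a generator, for instance), and `trange(n)` is a shortcut for `tqdm(range(n))`.

```python
import time

from tqdm import tqdm, trange

# desc= adds a label in front of the progress bar
for i in tqdm(range(1, 21), desc="Counting"):
    time.sleep(0.1)  # pause of 0.1 second, as asked in the exercise

# A generator has no len(), so total= lets tqdm display a percentage
squares = (n * n for n in range(5))
total = 0
for square in tqdm(squares, total=5):
    total += square
print(total)  # 30

# trange(n) is equivalent to tqdm(range(n))
for i in trange(3):
    pass
```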

matplotlib

A library to plot graphs, useful for data visualization.

import matplotlib.pyplot as plt

import numpy as np

# Let's make a basic, random plot

xpoints = np.array([2, 5, 12, 24])

ypoints = np.array([0, 15, 2, 8])

plt.plot(xpoints, ypoints)

plt.savefig("my_plot.pdf")  # save before plt.show(), otherwise the saved file may be empty

plt.show()

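# With a single argument, plt.plot uses the values as y and their indexes (0, 1, 2, ...) as x
# Calling plt.plot twice before plt.show() draws both lines on the same graph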

plt.plot(xpoints)

plt.plot(ypoints)

plt.show()

# Let's make it prettier/more interesting (endless possibilities/options!)

#plt.plot(xpoints, ypoints, marker="o")

#plt.plot(xpoints, ypoints, linestyle = 'dotted')

plt.plot(xpoints, ypoints, color="r", linewidth=10, linestyle="dotted", marker="o")

plt.xlabel("Potato")

plt.ylabel("Time")

#plt.grid()

plt.grid(axis='x')

plt.show()

# More plot types!

plt.scatter(xpoints, ypoints)

plt.show()

plt.bar(["A", "B", "C", "D"], xpoints, width=0.1)

plt.show()

plt.bar(["A", "B", "C", "D"], xpoints)  # default width is 0.8; for horizontal bars, plt.barh sets the thickness with height=...

plt.show()

# Note: be careful when plotting, try to make it color-blind friendly for greater inclusivity!

plt.style.use('tableau-colorblind10')

y = np.array([35, 25, 25, 15])

mylabels = ["Apples", "Bananas", "Cherries", "Dates"]

plt.pie(y, labels = mylabels)

plt.legend(title = "Four Fruits:", loc="upper right")

plt.show()
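Besides the `plt.` functions used above, matplotlib has an object-oriented interface where you create a figure and its axes explicitly, which makes it easier to draw several plots side by side. A minimal sketch reusing the `xpoints` and `ypoints` values; the `matplotlib.use("Agg")` line is only needed when running as a script without a display (drop it in a notebook), and the file name `two_plots.png` is arbitrary.

```python
import matplotlib

matplotlib.use("Agg")  # non-interactive backend: draws to files, no window needed
import matplotlib.pyplot as plt
import numpy as np

xpoints = np.array([2, 5, 12, 24])
ypoints = np.array([0, 15, 2, 8])

# One figure containing two axes (subplots) side by side
fig, (ax_line, ax_bar) = plt.subplots(1, 2, figsize=(8, 3))

ax_line.plot(xpoints, ypoints, marker="o")
ax_line.set_xlabel("Potato")
ax_line.set_ylabel("Time")
ax_line.set_title("Line plot")

ax_bar.bar(["A", "B", "C", "D"], xpoints)
ax_bar.set_title("Bar plot")

fig.tight_layout()  # avoid overlapping labels between the two plots
fig.savefig("two_plots.png")
```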

🥳 YOUR TURN: Create a dictionary with any data you'd like inside and make a plot out of it (any kind of plot you'd like)!

That's all for today! Any questions?